Introduction

Over the past few months, I’ve had the privilege of presenting on Kubernetes autoscaling to various audiences. At copebit, we build well-architected environments for our customers every day. A significant part of our current projects involves migrating applications to containers and hosting them on Kubernetes, specifically on AWS EKS (Elastic Kubernetes Service).

Building Well-Architected Environments

When we create environments like these, we start with thorough discovery sessions to analyze the requirements. These sessions ensure that we understand the client’s needs before we design and build the AWS infrastructure.

Case Study: Sly’s Velox E-Commerce Solution

Recently, we built a solution for Sly’s Velox e-commerce platform. Here’s how we approached the project:

- Design Phase: We designed the overall solution architecture.

- Containerization: We containerized the application.

- Infrastructure Building: We built the necessary AWS infrastructure.

- Deployment: We deployed the application in an elastic and autoscaled manner on AWS EKS.

Velox Application Architecture

The Velox application, as depicted in the architecture diagram, runs in containers on AWS EKS. It uses a MySQL-based database, Amazon DocumentDB (with MongoDB compatibility), Redis for caching, and OpenSearch for search. All of these components rely on native AWS services for backup, monitoring, security, and logging, which results in a robust, enterprise-grade solution.

Autoscaling with Karpenter

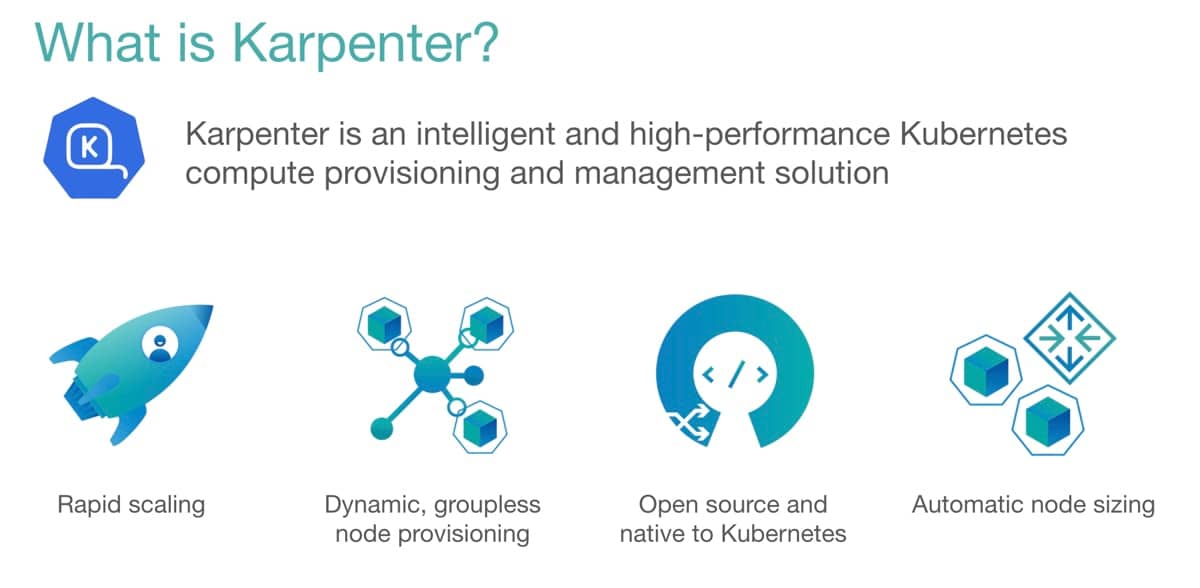

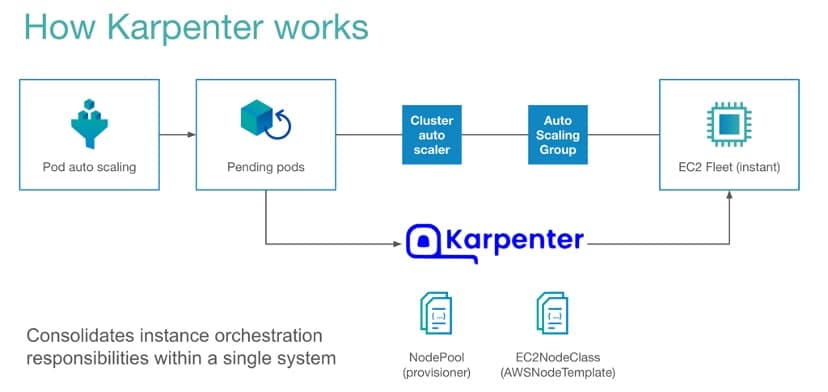

To scale applications efficiently on AWS EKS, we use Karpenter, an open-source node autoscaler that provisions worker nodes when pods cannot be scheduled and that works exceptionally well with Spot Instances. The setup combines an elastic database based on Aurora Serverless v2 with elastic worker nodes managed by Karpenter.

Benefits of Karpenter

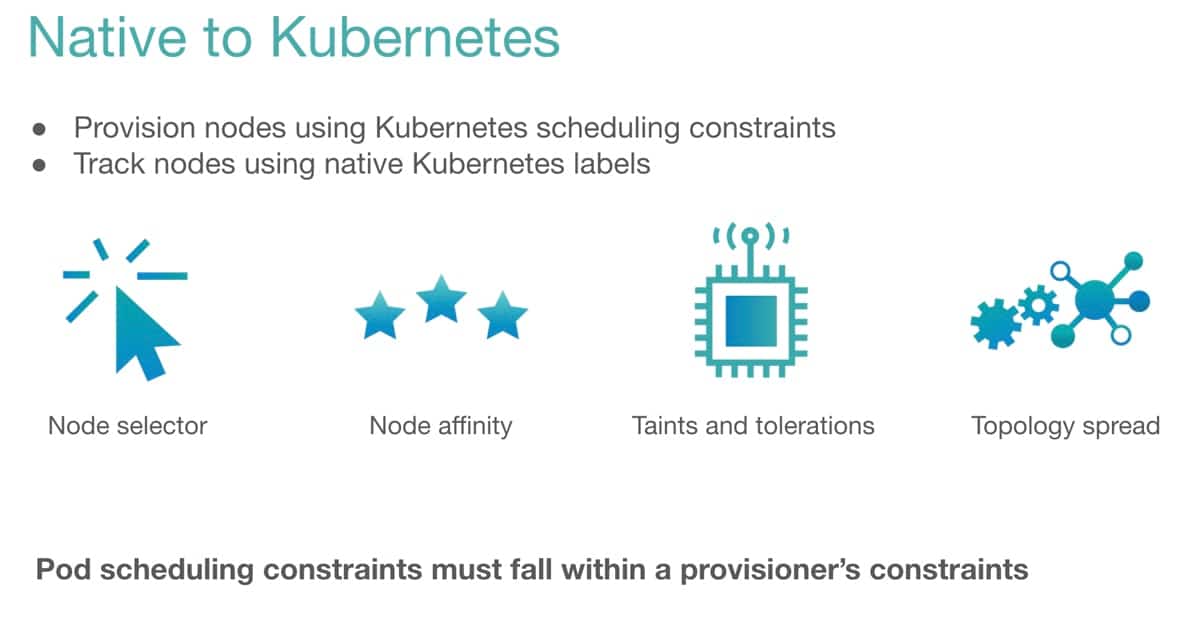

Karpenter integrates seamlessly into the Kubernetes ecosystem, using Kubernetes APIs and custom resource definitions (CRDs), and can be managed with standard Kubernetes tools like kubectl. Its benefits include:

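- Rapid Scaling: Responds quickly to pending pods by launching right-sized EC2 instances directly, without relying on node groups or Auto Scaling groups.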

- Cloud-Native Integration: Functions as a native Kubernetes element.

- Open Source: Freely available and community-supported.

- Spot Instances Compatibility: Optimizes costs with Spot Instances.

- Secure and Reliable: Ensures robust security and reliability.
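Because Karpenter is configured through CRDs, its behavior is described in ordinary Kubernetes manifests. The Python sketch below builds a minimal NodePool that allows both Spot and On-Demand capacity and enables consolidation, then prints it as JSON, which `kubectl apply -f -` accepts. It assumes the Karpenter v1 API (`karpenter.sh/v1`) and an existing `EC2NodeClass` named `default`; the names, the `amd64` restriction and the CPU limit are illustrative assumptions, not a client configuration.

```python
import json

# Illustrative NodePool, assuming the Karpenter v1 API and an existing
# EC2NodeClass called "default". All values are example assumptions.
node_pool = {
    "apiVersion": "karpenter.sh/v1",
    "kind": "NodePool",
    "metadata": {"name": "default"},
    "spec": {
        "template": {
            "spec": {
                "requirements": [
                    # Allow both Spot and On-Demand capacity.
                    {
                        "key": "karpenter.sh/capacity-type",
                        "operator": "In",
                        "values": ["spot", "on-demand"],
                    },
                    # Restrict to x86 instances (example constraint).
                    {
                        "key": "kubernetes.io/arch",
                        "operator": "In",
                        "values": ["amd64"],
                    },
                ],
                "nodeClassRef": {
                    "group": "karpenter.k8s.aws",
                    "kind": "EC2NodeClass",
                    "name": "default",
                },
            }
        },
        # Upper bound on the total CPU this NodePool may provision.
        "limits": {"cpu": "100"},
        # Let Karpenter remove or replace empty and underutilized nodes.
        "disruption": {
            "consolidationPolicy": "WhenEmptyOrUnderutilized",
            "consolidateAfter": "1m",
        },
    },
}

if __name__ == "__main__":
    # Usage: python nodepool.py | kubectl apply -f -
    print(json.dumps(node_pool, indent=2))
```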

Demonstrating Karpenter in Action

Scaling Event Simulation

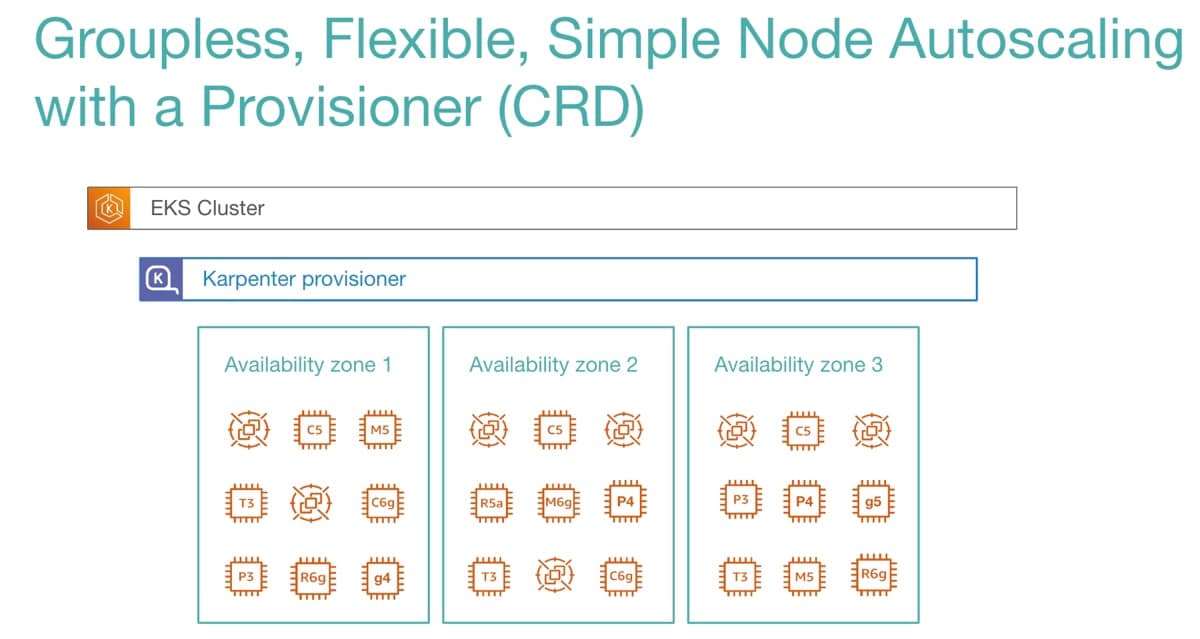

In this demonstration, we set up a Kubernetes cluster with Karpenter installed. We configured node pools and provisioners to handle scaling events. When a new application is deployed and requires additional capacity, Karpenter swiftly launches the necessary instances.

We used an inflate deployment, in which each pod requests one CPU, and scaled it to an odd number of replicas to illustrate how Karpenter selects appropriately sized instances. In the demo, Karpenter launched a new instance within about 45 seconds, giving the pending pods the capacity they needed to be scheduled.
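To show why an odd number of one-CPU pods makes a useful test, here is a greatly simplified Python sketch of instance selection: pick the smallest instance type whose allocatable CPU still fits all pending pods. The catalog uses real m5 vCPU counts, but `RESERVED_CPU` (a flat per-node overhead for the kubelet, system daemons and DaemonSets) and `pick_instance` are hypothetical. Karpenter’s actual scheduler bin-packs across many instance types and also accounts for price and availability.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class InstanceType:
    name: str
    vcpu: int


# m5 vCPU counts are real; On-Demand price roughly doubles with each size,
# so within this family the smallest type that fits is also the cheapest.
CATALOG = [
    InstanceType("m5.large", 2),
    InstanceType("m5.xlarge", 4),
    InstanceType("m5.2xlarge", 8),
    InstanceType("m5.4xlarge", 16),
]

# Hypothetical flat CPU overhead per node (kubelet, system daemons, DaemonSets).
RESERVED_CPU = 0.25


def pick_instance(pending_pods: int, cpu_per_pod: float = 1.0) -> Optional[InstanceType]:
    """Return the smallest instance type whose allocatable CPU fits all pending pods.

    Returns None if no single instance type in the catalog is large enough.
    """
    needed = pending_pods * cpu_per_pod
    for itype in sorted(CATALOG, key=lambda t: t.vcpu):
        allocatable = itype.vcpu - RESERVED_CPU
        if allocatable >= needed:
            return itype
    return None


if __name__ == "__main__":
    for pods in (1, 3, 4, 5):
        choice = pick_instance(pods)
        print(pods, "pods ->", choice.name if choice else "needs more than one node")
```

Under these assumptions, three pods fit on an `m5.xlarge`, while a fourth pushes the request past its allocatable CPU and the sketch moves up to an `m5.2xlarge`.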

Consolidation Feature

Karpenter’s consolidation feature optimizes resource utilization. If instances are underutilized (e.g., an 8-CPU instance is only partially used), Karpenter moves their workloads onto fewer or cheaper instances and removes the ones that are no longer needed. This reduces both costs and infrastructure overhead.

In another demo, launching multiple instances left the resources fragmented. Karpenter quickly recognized the idle capacity and consolidated the workloads onto a better-suited set of instances, demonstrating both cost savings and simpler infrastructure management.
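The idea behind consolidation can be sketched in a few lines of Python. This is a simplified, hypothetical model of delete-style consolidation only: it looks for a node, least utilized first, whose pods can all be re-packed onto the spare CPU of the remaining nodes using first-fit decreasing. The real feature also considers replacing nodes with cheaper ones, disruption budgets and many scheduling constraints that are omitted here.

```python
from typing import Dict, List, Optional


def find_removable_node(
    capacity: Dict[str, float], pods: Dict[str, List[float]]
) -> Optional[str]:
    """Return a node whose pods can all be re-packed onto the other nodes, or None.

    capacity maps node name -> allocatable CPU; pods maps node name -> the CPU
    requests of the pods currently running on that node.
    """
    used = {node: sum(pods.get(node, [])) for node in capacity}
    # Try to drain the least-utilized node first.
    for candidate in sorted(capacity, key=lambda n: used[n] / capacity[n]):
        free = {n: capacity[n] - used[n] for n in capacity if n != candidate}
        fits = True
        # First-fit decreasing: place the largest requests first.
        for request in sorted(pods.get(candidate, []), reverse=True):
            target = next((n for n in free if free[n] >= request), None)
            if target is None:
                fits = False
                break
            free[target] -= request
        if fits:
            return candidate
    return None


if __name__ == "__main__":
    capacity = {"a": 8.0, "b": 8.0, "c": 8.0}
    pods = {"a": [1.0] * 5, "b": [1.0] * 2, "c": [1.0]}
    print(find_removable_node(capacity, pods))
```

For three 8-CPU nodes running five, two and one single-CPU pods, the sketch reports that node `c` can be removed, because its only pod fits on the spare capacity of the others.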

Advanced Features of Karpenter

Karpenter is aware of Availability Zones (AZs) and can launch instances in a specific AZ to meet workload requirements, for example when a pod’s PersistentVolumeClaim (PVC) is bound to a volume in a particular AZ (EBS volumes, for instance, are zonal). This speeds up node provisioning and simplifies EKS management.
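A simplified sketch of the zone decision: a new node for a pod must land in a zone that the NodePool allows and that matches the zone of every volume already bound to the pod’s PVCs. The function `choose_zone` and the zone names are hypothetical; in Kubernetes these constraints are expressed through zone labels and PersistentVolume node affinity, and a real provisioner would also weigh price and availability rather than picking alphabetically.

```python
from typing import Iterable, Optional, Set


def choose_zone(nodepool_zones: Set[str], volume_zones: Iterable[str]) -> Optional[str]:
    """Pick a zone for a new node that satisfies every bound volume, or None.

    nodepool_zones: zones the NodePool may launch into (hypothetical input).
    volume_zones: zone of each PersistentVolume already bound to the pod's PVCs,
    e.g. read from the PersistentVolume's node affinity.
    """
    allowed = set(nodepool_zones)
    for zone in volume_zones:
        # A zonal volume (such as EBS) can only attach to nodes in its own zone.
        allowed &= {zone}
    # Deterministic pick for the sketch only.
    return min(allowed) if allowed else None


if __name__ == "__main__":
    zones = {"eu-central-2a", "eu-central-2b", "eu-central-2c"}
    print(choose_zone(zones, ["eu-central-2b"]))
```

If a pod’s volumes sit in two different zones, the sketch returns `None`: no single node can satisfy both, so the pod stays unschedulable no matter how many nodes are launched.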

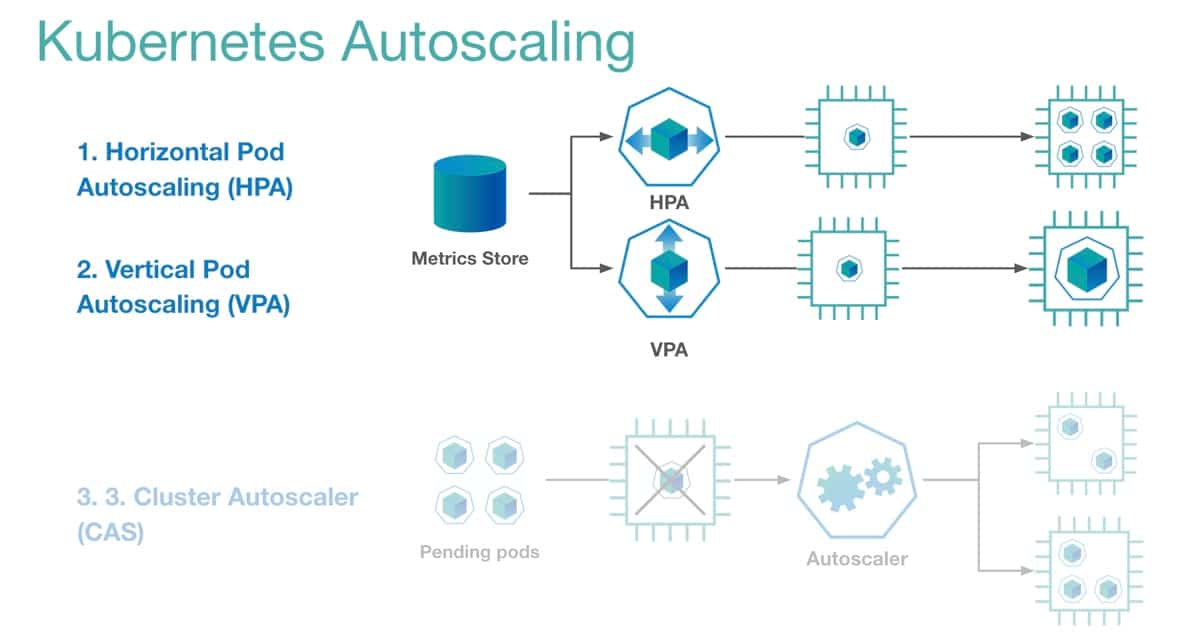

Pod Scaling Integration

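Karpenter works seamlessly with the Horizontal Pod Autoscaler (HPA) and the Vertical Pod Autoscaler (VPA). The pod autoscalers adjust the number or size of pods, and Karpenter adds or removes nodes so that those pods have room to run. Well-tuned pod scaling policies combined with Karpenter’s node management are key to good performance and resource utilization.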

In a stress test using Apache Bench on an nginx deployment with HPA configured, we observed how Karpenter handled the scaling dynamically. As CPU load increased, HPA added more pods, and Karpenter launched new worker nodes to accommodate the load. Once the load decreased, HPA scaled down the pods, and Karpenter removed the excess worker node capacity.
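The interplay in the stress test can be approximated with the HPA’s documented scaling rule, `desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue)`, which is skipped while the ratio stays within a tolerance (0.1 by default). The Python sketch below implements that rule, clamped to hypothetical `min_replicas`/`max_replicas` bounds, plus a rough, hypothetical estimate of how many identical nodes the resulting pods would need. It ignores stabilization windows, DaemonSets and mixed instance types.

```python
import math


def hpa_desired_replicas(
    current_replicas: int,
    current_util: float,
    target_util: float,
    min_replicas: int = 1,
    max_replicas: int = 10,
    tolerance: float = 0.1,
) -> int:
    """HPA scaling rule: ceil(current * currentMetric / targetMetric), clamped.

    No change is made while the ratio stays within the tolerance.
    """
    ratio = current_util / target_util
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    desired = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, desired))


def nodes_needed(replicas: int, cpu_request: float, node_allocatable_cpu: float) -> int:
    """Rough node count, assuming identical nodes and CPU as the only constraint."""
    pods_per_node = math.floor(node_allocatable_cpu / cpu_request)
    if pods_per_node == 0:
        raise ValueError("a single pod does not fit on one node")
    return math.ceil(replicas / pods_per_node)


if __name__ == "__main__":
    scaled_up = hpa_desired_replicas(2, current_util=150, target_util=50)
    print(scaled_up, nodes_needed(scaled_up, 0.5, 1.75))
    scaled_down = hpa_desired_replicas(scaled_up, current_util=10, target_util=50)
    print(scaled_down, nodes_needed(scaled_down, 0.5, 1.75))
```

With these hypothetical numbers, two nginx replicas at 150% CPU utilization against a 50% target scale to six, which at 0.5 CPU per pod and 1.75 allocatable CPUs per node need two nodes. When utilization drops to 10%, the rule scales back to two replicas, which fit on one node, and the freed node becomes a consolidation candidate for Karpenter.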

Conclusion

Karpenter is a powerful solution for Kubernetes autoscaling, offering speed, efficiency, and cost-effectiveness. It can be deployed entirely with Terraform or in a GitOps style with tools like Flux or Argo CD. At copebit, we have successfully deployed Karpenter for various clients, using Spot Instances to achieve significant cost savings and improved performance. As a first-class citizen on Kubernetes, Karpenter offers excellent node and capacity management, including support for Spot Instances and node constraints.

If you’re looking to enhance your Kubernetes infrastructure with advanced autoscaling capabilities, give Karpenter a try. For expert assistance, reach out to copebit. Our extensive experience ensures a smooth and efficient deployment, tailored to your specific needs.