At copebit, we specialize in building elastic infrastructure, using cloud-native technologies to optimize performance and cost efficiency for our clients. Our core expertise lies in containerization with Kubernetes (especially AWS EKS) and in implementing scalable solutions for compute resources, caching, and databases. We design these environments with a focus on the AWS Well-Architected Framework.

Building Well-Architected Environments

When creating environments like these, our process begins with thorough discovery sessions to analyze the requirements. These sessions ensure we understand the client’s needs before we design and build the AWS infrastructure accordingly.

Potential Applications of Elastic Infrastructure

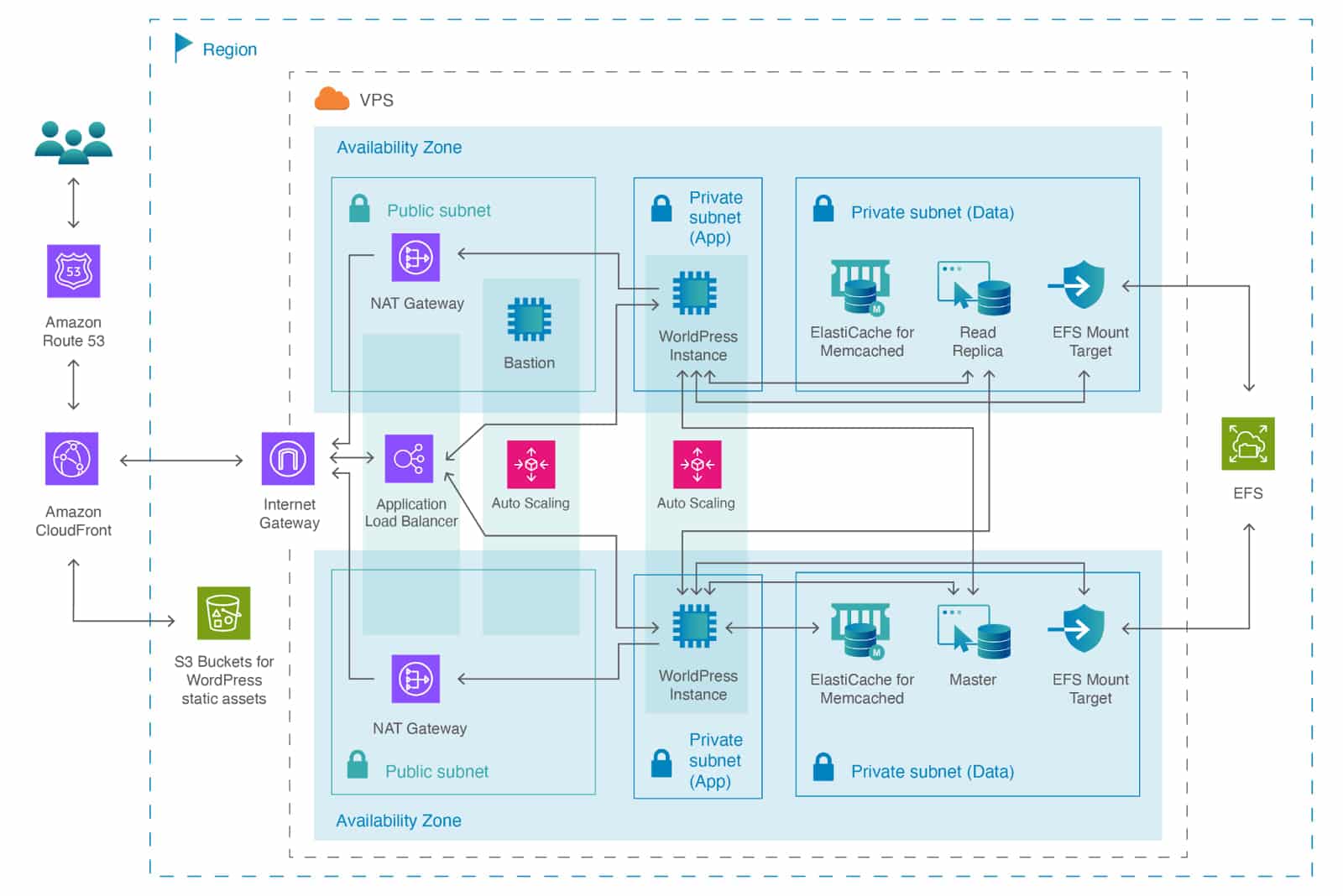

The image above shows an example AWS environment hosting a PHP application on virtual machines. This setup uses several AWS services, including EC2 Auto Scaling, Aurora Serverless v2, and ElastiCache Serverless, all of which can be configured to scale resources dynamically in response to application, cache, and database demand.

AWS has simplified the creation of such elastic infrastructures, offering a wide range of autoscaled managed services. This eliminates the complexities of coordination, application-specific knowledge, and maintenance that were previously associated with building and managing such environments. With AWS and infrastructure as code, it is now possible to quickly deploy and manage scalable and elastic environments.

What is Autoscaling?

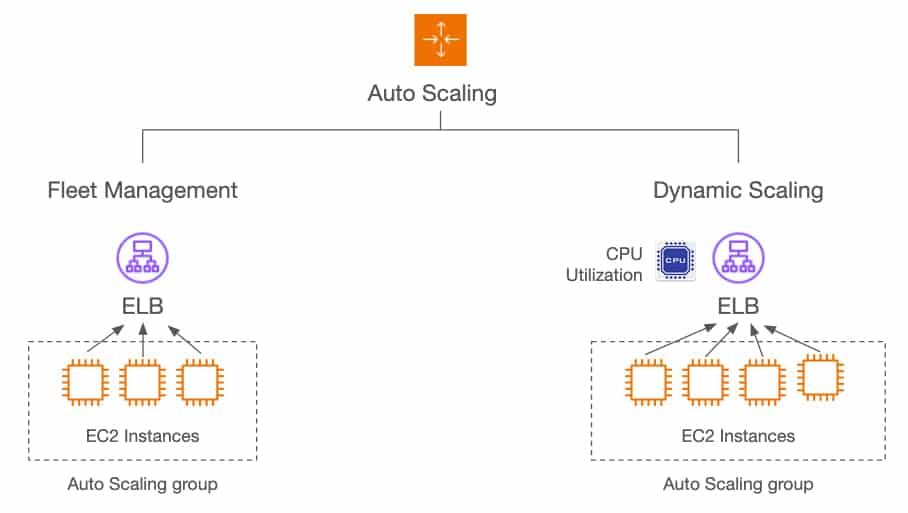

Autoscaling combines systems into a group and scales that group based on demand. A load balancer distributes traffic across the instances, and the autoscaling logic measures demand (for example CPU, memory, or the number of connections on the load balancer). Scaling policies then adjust the number of instances or resources.
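As one concrete form of such a scaling policy, the sketch below shows a target tracking policy in Terraform, where the service adds or removes instances to keep average CPU near a target. The variable `asg_name` and the 60% target are assumptions for illustration, not values from a real environment.

```hcl
# Hypothetical input: name of an Auto Scaling group that already exists.
variable "asg_name" {
  description = "Name of the Auto Scaling group to scale (assumed to exist)"
  type        = string
}

# Target tracking: instances are added or removed to hold the metric
# close to target_value, without separately defined alarms.
resource "aws_autoscaling_policy" "cpu_target" {
  name                   = "cpu-target-tracking"
  autoscaling_group_name = var.asg_name
  policy_type            = "TargetTrackingScaling"

  target_tracking_configuration {
    predefined_metric_specification {
      # Average CPU utilization across all instances in the group
      predefined_metric_type = "ASGAverageCPUUtilization"
    }
    target_value = 60 # illustrative target, in percent
  }
}
```

Target tracking handles both directions with a single resource, which is often simpler than maintaining separate alarms for scaling up and down.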

Autoscaling on EC2

EC2 Autoscaling is an effective tool for scaling virtual machines. It seamlessly integrates with EC2 instances, Auto Scaling Groups, Launch Templates, and Load Balancers to deliver a comprehensive autoscaling solution for your applications. However, it’s important to remember that your application must be designed to support autoscaling. Ideally, it should adhere to the 12-Factor App methodology, which recommends against storing state on the instance itself.
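How these building blocks fit together can be sketched in Terraform roughly as follows. This is a minimal illustration, not our production setup: the three variables, the instance type, and the size limits are assumptions, and the load balancer and its target group are expected to exist already.

```hcl
# Hypothetical inputs, supplied by the surrounding environment.
variable "ami_id" {
  description = "AMI with the application baked in (assumed)"
  type        = string
}

variable "private_subnet_ids" {
  description = "Subnets the instances are launched into (assumed)"
  type        = list(string)
}

variable "target_group_arn" {
  description = "Target group of an existing load balancer (assumed)"
  type        = string
}

# Launch Template: describes what a single instance looks like.
resource "aws_launch_template" "example" {
  name_prefix   = "php-app-"
  image_id      = var.ami_id
  instance_type = "t3.medium" # illustrative size
}

# Auto Scaling Group: keeps between min_size and max_size instances
# running and registers them with the load balancer's target group.
resource "aws_autoscaling_group" "example" {
  name                = "php-app-asg"
  min_size            = 2
  max_size            = 10
  vpc_zone_identifier = var.private_subnet_ids
  target_group_arns   = [var.target_group_arn]
  health_check_type   = "ELB" # replace instances the load balancer marks unhealthy

  launch_template {
    id      = aws_launch_template.example.id
    version = "$Latest"
  }
}
```

`desired_capacity` is deliberately left unset so that Terraform does not override the value chosen by scaling policies on the next apply.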

The following sample Terraform code defines a scaling policy for EC2-based virtual machines.

    # CloudWatch alarm that triggers scaling up
    resource "aws_cloudwatch_metric_alarm" "scale_up" {
      alarm_name          = "cpu-high-alarm"
      comparison_operator = "GreaterThanThreshold"
      evaluation_periods  = 2 # two consecutive periods above the threshold
      metric_name         = "CPUUtilization"
      namespace           = "AWS/EC2"
      period              = 60 # seconds
      statistic           = "Average"
      threshold           = 60 # percent
      alarm_description   = "Scale up when CPU > 60%"
      actions_enabled     = true

      # Aggregate the metric over all instances in the Auto Scaling group
      dimensions = {
        AutoScalingGroupName = aws_autoscaling_group.example.name
      }

      alarm_actions = [aws_autoscaling_policy.scale_up.arn]
    }

    # Scaling policy for scaling up: add one instance per alarm
    resource "aws_autoscaling_policy" "scale_up" {
      name                   = "scale-up-policy"
      scaling_adjustment     = 1
      adjustment_type        = "ChangeInCapacity"
      cooldown               = 300 # seconds to wait before the next scaling activity
      autoscaling_group_name = aws_autoscaling_group.example.name
    }
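The sample above only covers scaling up. A matching counterpart for scaling down could look like the sketch below. The 30% threshold and the longer evaluation window are assumptions chosen for illustration; it references the same `aws_autoscaling_group.example` as the code above.

```hcl
# CloudWatch alarm that triggers scaling down (illustrative thresholds)
resource "aws_cloudwatch_metric_alarm" "scale_down" {
  alarm_name          = "cpu-low-alarm"
  comparison_operator = "LessThanThreshold"
  evaluation_periods  = 5 # wait longer before removing capacity
  metric_name         = "CPUUtilization"
  namespace           = "AWS/EC2"
  period              = 60
  statistic           = "Average"
  threshold           = 30
  alarm_description   = "Scale down when CPU < 30%"

  dimensions = {
    AutoScalingGroupName = aws_autoscaling_group.example.name
  }

  alarm_actions = [aws_autoscaling_policy.scale_down.arn]
}

# Scaling policy for scaling down: remove one instance per alarm
resource "aws_autoscaling_policy" "scale_down" {
  name                   = "scale-down-policy"
  scaling_adjustment     = -1
  adjustment_type        = "ChangeInCapacity"
  cooldown               = 300
  autoscaling_group_name = aws_autoscaling_group.example.name
}
```

Keeping a gap between the scale-up and scale-down thresholds helps avoid the group oscillating between two sizes.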

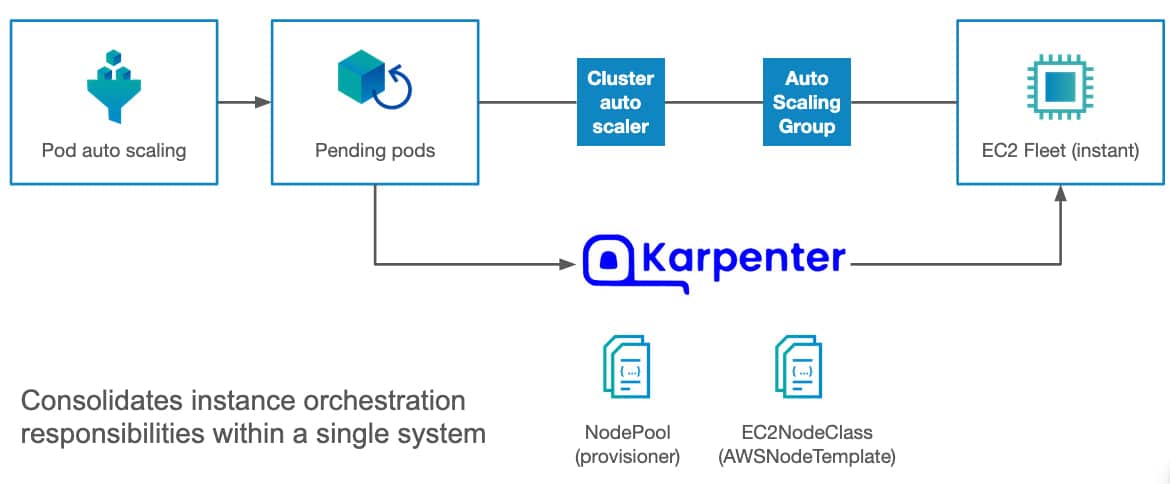

Autoscaling on Kubernetes

To scale applications efficiently on AWS EKS, we use Karpenter. Karpenter is an open-source autoscaling solution that works exceptionally well with Spot Instances. This setup includes an elastic database using Aurora Serverless v2 and elastic worker nodes managed by Karpenter.

Benefits of Karpenter

Karpenter integrates seamlessly into the Kubernetes ecosystem, using Kubernetes APIs and custom resource definitions (CRDs), and can be managed with standard Kubernetes tools like kubectl. Its benefits include:

- Rapid Scaling: Quickly responds to scaling events.

- Cloud-Native Integration: Functions as a native Kubernetes element.

- Open Source: Freely available and community-supported.

- Spot Instances Compatibility: Optimizes costs with Spot Instances.

- Secure and Reliable: Ensures robust security and reliability.

The following snippet shows how a Karpenter NodePool can be configured in Kubernetes:

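    # NodePool: which kinds of nodes Karpenter may provision
    apiVersion: karpenter.sh/v1beta1
    kind: NodePool
    metadata:
      name: karpenter-nodepool
    spec:
      template:
        spec:
          nodeClassRef:
            apiVersion: karpenter.k8s.aws/v1beta1
            kind: EC2NodeClass
            name: default
          requirements:
            - key: "node.kubernetes.io/instance-type"
              operator: In
              values: ["m5.large", "m6i.large", "m6a.large"]
    ---
    # EC2NodeClass: AWS-specific settings (subnets, security groups, AMI)
    apiVersion: karpenter.k8s.aws/v1beta1
    kind: EC2NodeClass
    metadata:
      name: default
    spec:
      amiFamily: AL2
      role: "KarpenterNodeRole-example-cluster" # assumed placeholder for the node IAM role
      subnetSelectorTerms:
        - tags:
            karpenter.sh/discovery: "example-cluster"
      securityGroupSelectorTerms:
        - tags:
            karpenter.sh/discovery: "example-cluster"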
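To illustrate the Spot Instances benefit listed above, the sketch below defines an additional NodePool that allows Spot capacity, written in Terraform with the `kubernetes_manifest` resource of the Kubernetes provider. It assumes the Karpenter v1beta1 API (`karpenter.sh/v1beta1`), a configured Kubernetes provider, installed Karpenter CRDs, and an existing EC2NodeClass named `default`. The names and the CPU limit are hypothetical.

```hcl
resource "kubernetes_manifest" "spot_nodepool" {
  manifest = {
    apiVersion = "karpenter.sh/v1beta1"
    kind       = "NodePool"
    metadata = {
      name = "spot-nodepool" # hypothetical name
    }
    spec = {
      template = {
        spec = {
          # Assumed to exist: an EC2NodeClass called "default"
          nodeClassRef = {
            apiVersion = "karpenter.k8s.aws/v1beta1"
            kind       = "EC2NodeClass"
            name       = "default"
          }
          requirements = [
            {
              # Allow Spot, and On-Demand as an alternative capacity type
              key      = "karpenter.sh/capacity-type"
              operator = "In"
              values   = ["spot", "on-demand"]
            }
          ]
        }
      }
      # Upper bound on the total CPU this NodePool may provision
      limits = {
        cpu = "100"
      }
      # Let Karpenter remove or replace underutilized nodes
      disruption = {
        consolidationPolicy = "WhenUnderutilized"
      }
    }
  }
}
```

Newer Karpenter API versions rename some of these fields, so the manifest should be checked against the version installed in the cluster.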

Demonstrating Karpenter in Action

Our previous blog post covers the details and includes demo videos:

https://www.copebit.ch/en/kubernetes-autoscaling-with-copebit-transforming-cloud-native-solutions/

How to Scale Databases

AWS has offered several types of elastic databases for years: NoSQL with DynamoDB, SQL with MySQL and Postgres on Aurora, and graph databases with Neptune. NoSQL has been relatively easy to scale and offers good elasticity. SQL has had very good autoscaling support since the introduction of Aurora Serverless v2 for Postgres and MySQL.

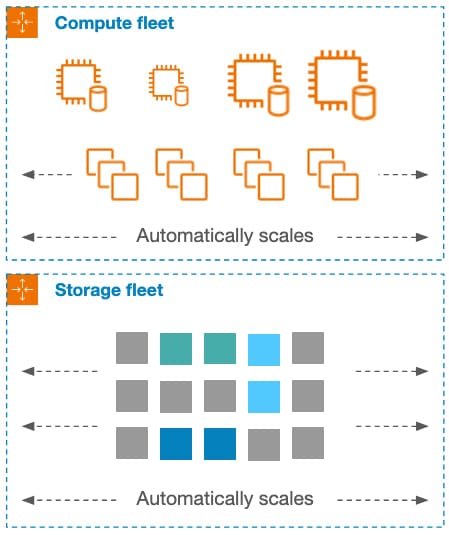
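The DynamoDB elasticity mentioned above can be configured through Application Auto Scaling. The following Terraform sketch scales the read capacity of a table in provisioned capacity mode. The variable `table_name`, the capacity limits, and the 70% target are illustrative assumptions.

```hcl
# Hypothetical input: name of an existing DynamoDB table in provisioned mode.
variable "table_name" {
  description = "Name of the DynamoDB table to scale (assumed to exist)"
  type        = string
}

# Register the table's read capacity as a scalable target.
resource "aws_appautoscaling_target" "table_read" {
  service_namespace  = "dynamodb"
  resource_id        = "table/${var.table_name}"
  scalable_dimension = "dynamodb:table:ReadCapacityUnits"
  min_capacity       = 5   # illustrative lower bound
  max_capacity       = 100 # illustrative upper bound
}

# Keep consumed read capacity at roughly 70% of provisioned capacity.
resource "aws_appautoscaling_policy" "table_read" {
  name               = "dynamodb-read-target-tracking"
  policy_type        = "TargetTrackingScaling"
  service_namespace  = aws_appautoscaling_target.table_read.service_namespace
  resource_id        = aws_appautoscaling_target.table_read.resource_id
  scalable_dimension = aws_appautoscaling_target.table_read.scalable_dimension

  target_tracking_scaling_policy_configuration {
    predefined_metric_specification {
      predefined_metric_type = "DynamoDBReadCapacityUtilization"
    }
    target_value = 70
  }
}
```

Write capacity can be handled the same way with the `dynamodb:table:WriteCapacityUnits` dimension and the `DynamoDBWriteCapacityUtilization` metric.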

Elastic SQL Databases

Aurora Serverless v2, which we use for all customers who need SQL and use Postgres or MySQL, is an excellent service.

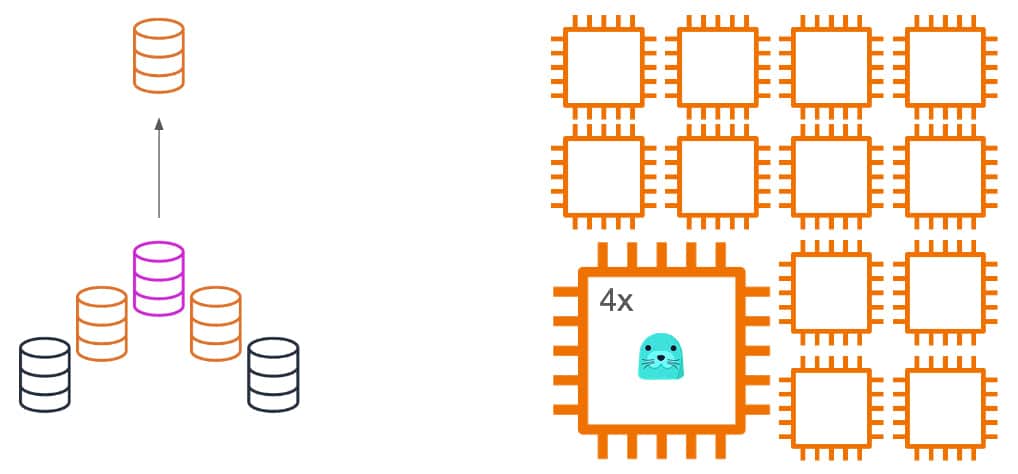

Aurora separates compute from storage. Storage capacity has scaled automatically for years, and I/O can now be scaled as well. Since Serverless v2, compute scales up and down very quickly. In the best case, Serverless v2 scales up within 100 ms by using the Caspian technology to hot-add CPU and memory to a running instance. Scaling down still requires finding a short swap window, but it is also fully automatic.

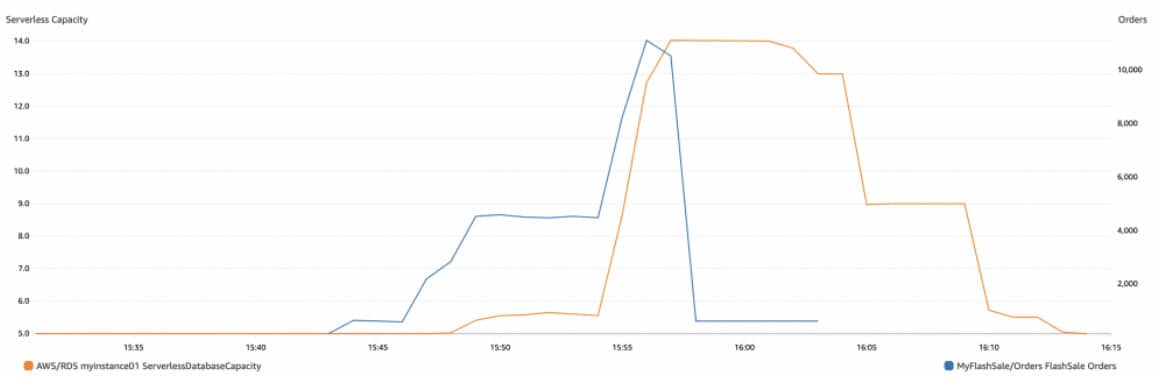

The image illustrates how the infrastructure scales to meet the database demand. The blue line represents the actual end-user demand on the database, while the orange line shows the scaling of the underlying Aurora instance.

Database Scaling Demo

This video demonstrates the seamless and fully elastic scaling of Aurora Serverless v2. The database scales up rapidly in response to increased demand and scales down automatically a few minutes after the load subsides.

We use the sysbench utility, running on an EKS Auto Mode cluster with an active NodePool, to simulate load. It launches 100 threads that send a large number of requests to the database. Within a few tens of seconds, the capacity section of the metrics shows that the database has scaled automatically.

The video also shows how scaling down occurred seamlessly in a previous test without any manual intervention.

The following sample Terraform code deploys an Aurora Serverless v2 cluster.

    provider "aws" {
      region = "us-east-1"
    }

    # Master password. Supply it via the TF_VAR_db_password environment
    # variable instead of hard-coding it. Without a default, Terraform
    # refuses to run until a value is provided.
    variable "db_password" {
      description = "Database master password"
      type        = string
      sensitive   = true
    }

    resource "aws_rds_cluster" "aurora_serverless_v2" {
      cluster_identifier = "aurora-serverless-v2"
      engine             = "aurora-mysql"
      engine_version     = "8.0.mysql_aurora.3.04.0"
      database_name      = "mydatabase"
      master_username    = "admin"
      master_password    = var.db_password

      # Capacity range in Aurora Capacity Units (ACUs)
      serverlessv2_scaling_configuration {
        min_capacity = 0.5
        max_capacity = 4
      }

      storage_encrypted   = true
      skip_final_snapshot = true # fine for a demo; reconsider for production
    }

    # Serverless v2 instances use the special "db.serverless" instance class
    resource "aws_rds_cluster_instance" "aurora_instance" {
      cluster_identifier = aws_rds_cluster.aurora_serverless_v2.id
      instance_class     = "db.serverless"
      engine             = aws_rds_cluster.aurora_serverless_v2.engine
    }

    output "cluster_endpoint" {
      value     = aws_rds_cluster.aurora_serverless_v2.endpoint
      sensitive = true
    }
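Because the cluster above scales only up to `max_capacity`, it can be useful to be notified when the database runs close to that ceiling. The sketch below adds a CloudWatch alarm on the `ACUUtilization` metric of the instance defined above. The variable `alert_topic_arn`, the 90% threshold, and the evaluation window are assumptions for illustration.

```hcl
# Hypothetical input: an existing SNS topic that receives the notification.
variable "alert_topic_arn" {
  description = "ARN of an SNS topic for alerts (assumed to exist)"
  type        = string
}

# Alarm when the instance stays close to its maximum configured capacity.
resource "aws_cloudwatch_metric_alarm" "aurora_acu_high" {
  alarm_name          = "aurora-acu-utilization-high"
  comparison_operator = "GreaterThanThreshold"
  evaluation_periods  = 5
  metric_name         = "ACUUtilization" # current capacity as a percentage of max_capacity
  namespace           = "AWS/RDS"
  period              = 60
  statistic           = "Average"
  threshold           = 90 # illustrative threshold, in percent
  alarm_description   = "Aurora Serverless v2 is running near its maximum capacity"

  dimensions = {
    DBInstanceIdentifier = aws_rds_cluster_instance.aurora_instance.identifier
  }

  alarm_actions = [var.alert_topic_arn]
}
```

If this alarm fires regularly, raising `max_capacity` in the scaling configuration is usually the first thing to evaluate.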

Scaling Caches

ElastiCache Serverless, a fully managed caching service for Memcached and Redis, has been available for approximately a year. It uses AWS’s Caspian technology to add CPU and other resources dynamically, which enables near-instant scaling of the underlying instances. With Redis, sharding can additionally be used to build a cluster that scales even further. ElastiCache Serverless simplifies cache cluster management and scales faster and more efficiently than instance-based scaling.

Scaling up happens almost instantly, but scaling down, as with Aurora, requires replacing the underlying instance, because memory that is in use cannot be removed from a running instance.
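A serverless cache of this kind can be declared in Terraform with very little configuration. The sketch below is a minimal example, assuming a recent AWS provider version that includes the `aws_elasticache_serverless_cache` resource. The two variables, the cache name, and the usage limits are hypothetical.

```hcl
# Hypothetical inputs: networking for the cache.
variable "cache_subnet_ids" {
  description = "Subnets the cache endpoints are placed in (assumed)"
  type        = list(string)
}

variable "cache_security_group_ids" {
  description = "Security groups that control access to the cache (assumed)"
  type        = list(string)
}

resource "aws_elasticache_serverless_cache" "example" {
  engine      = "redis"
  name        = "example-serverless-cache"
  description = "Serverless cache for the application tier"

  # Optional ceilings that cap how far the cache may scale (and cost)
  cache_usage_limits {
    data_storage {
      maximum = 10
      unit    = "GB"
    }
    ecpu_per_second {
      maximum = 5000
    }
  }

  subnet_ids         = var.cache_subnet_ids
  security_group_ids = var.cache_security_group_ids
}
```

No node type or node count appears in the configuration, since capacity is managed by the service within the limits set above.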

Summary of Elastic AWS Services

- Compute
  - Virtual Machines with EC2 AutoScaling
  - Containers with ECS AutoScaling
  - Containers with Karpenter on EKS
  - Serverless with Lambda

- Databases
  - NoSQL with DynamoDB AutoScaling
  - SQL with MySQL and Postgres on Aurora Serverless v2
  - Graph Databases with Neptune

- Caching with ElastiCache Serverless

- Search with OpenSearch Serverless

- Messaging with Kafka on MSK Serverless

This section provides an overview of the elastic services that are currently leveraged successfully at copebit.

Conclusion

At copebit, we’re all about building cloud infrastructure that’s both scalable and cost-effective. We’re big fans of Kubernetes and other cloud-native technologies on AWS – they’re game-changers!

We kick things off by getting to know your specific needs. Then we leverage awesome AWS services like EC2 Autoscaling and Aurora Serverless to design and build a solution that’s just right for you. The best part? You get an elastic system that’s super easy to manage.

We take the complexity out of building and managing cloud infrastructure so you can focus on what matters most – your business!

Let us help you optimize your cloud infrastructure and achieve your goals!